A microfluidic panel lets users push buttons on a flat interface.

Touchscreens are attention hogs: you've simply got to look at them each time you want to type a letter. So the folks at Tactus Technology came up with a tactile layer that lets real physical buttons morph out of a transparent touchscreen surface when needed and disappear back into the screen when done.

The idea of a deformable touchscreen surface came to Craig Ciesla, CEO of Tactus, back in 2007, when he found himself using his BlackBerry instead of the newly released iPhone because of its keyboard. That set him wondering whether it might be possible to marry a physical tactile surface with a flat touchscreen. Could any type of keyboard or interface you wanted rise and fall out of a touchscreen on demand, giving you the best of both worlds?

Currently, every interface designer has to handle two fundamental challenges in touchscreen technology: knowing where your fingers are on a completely flat surface (orientation) and knowing when you've actually done something (confirmation). Almost all of the solutions out there use haptic or auditory cues to let users know they've triggered an input, but none of them really addresses positioning. This is where the layer really shines, according to its developers. "We are truly physical deformation, you know where you are, you can feel the edges and the positions of the keys," says Nate Saal, VP of Business Development at Tactus.

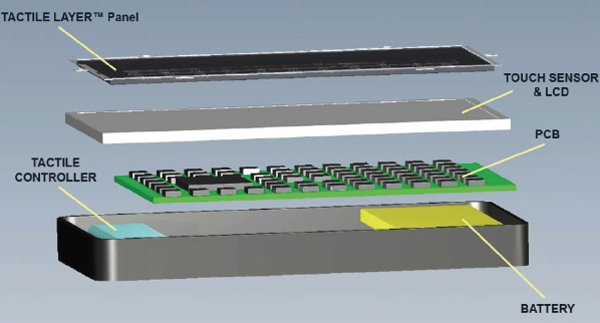

Once the layer morphs to create the buttons, they remain stable, and you can type on them for seconds or hours. The buttons are triggered to rise or fall by a proximity sensor or by software, and their shape, size, height, and firmness can be finely controlled. It's even possible to preconfigure multiple button sets during manufacturing and have different sets rise according to the user's interface needs.

Saal is keen to point out, though, that it's way more than a cool idea. "We are not a keyboard technology, nor a button technology, we are a user interface technology where people can take our technology and create whatever kind of interface they want," Saal explains. "It could be buttons, it could be guidelines, it could be any sort of shape or construct on that surface."

Think gridlines rising out of the backside of a device on a door, or an interface curved all around a steering wheel that pops up ring shapes when you need to change the volume on your car's music player. "Having this technology in my pocket or in my bag is cool, but what if it was also on the side of a building? What happens if the entire glass facade of a building is one giant touchscreen that can physically change?" asks Brian Crooks, Associate Creative Director at And Partners. "Designers will definitely have a lot of fun investigating and playing with all the possibilities this brings to the table," he adds.

The immediate benefits are being able to touch-type much faster than the 25 words per minute possible with a touchscreen keyboard, and being able to do far more on the go with existing devices. "Today's mobile devices are tremendously capable computers and the reason we do not use them like desktop computers or laptops is because the human-computer interface is contained," says Chris Harrison, a Carnegie Mellon University researcher who developed a pneumatically controlled tactile layer in the laboratory a few years ago.

Sharon Rosenblatt of Accessibility Partners, a company that tests for compliance and for use of innovative technology by people with disabilities, believes it would be a tremendous step in the right direction if touchscreens could truly become tactile. At present, accessibility is a huge issue for the visually impaired, the elderly, and people with muscle or joint disorders. For them, using the growing number of touchscreen-enabled devices, from ATMs to microwaves and mobile phones, is a constant struggle.

Tactus expects to start shipping its layer to manufacturers in mid-2013. The technology is currently on display at SID (the Society for Information Display) in Boston. Considering how many technologies leave most of our senses out, the effort to bring more sensory information into the next generation of user interfaces is exciting news for some. "While our sight isn't that of an eagle, and our smell not that of a dog, and our hearing not a bat's, our sense of touch may be our single most sensitive input method, and it is therefore logical that in a world of touch interfaces, we would strive to bring back some of the tactility we've lost compared to traditional hardware," says Michael Fienen, Senior Interactive Developer at Aquent.